00079-Hugging Face Miscellany-Windows 10

Preface

The official Hugging Face website is https://huggingface.co/ .

Mirror site: https://hf-mirror.com/

Operating system: Windows 10 Pro

Reference documents

Mirror site

Source tutorial: https://blog.csdn.net/mar1111s/article/details/137179180 .

Set the environment variable in Python as follows:

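    import os
    os.environ["HF_ENDPOINT"] = "https://hf-mirror.com"  # set before importing huggingface_hub or transformers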

On Linux, run the following command or add it to ~/.bashrc:

    export HF_ENDPOINT=https://hf-mirror.com
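Setting the variable from Python only helps if it happens early enough. The sketch below wraps the assignment in a small helper and reports whether huggingface_hub was already imported at that point. The helper name `use_mirror` is made up for this post, and the idea that the library reads HF_ENDPOINT when it is first imported is an assumption worth checking against the version you have installed.

```python
import os
import sys

MIRROR_ENDPOINT = "https://hf-mirror.com"  # mirror URL quoted in this post


def use_mirror(endpoint: str = MIRROR_ENDPOINT) -> bool:
    """Point HF_ENDPOINT at `endpoint` for the current process.

    Returns True if huggingface_hub had not been imported yet, i.e. the
    variable was set early enough to be seen at import time (assumption:
    the library reads HF_ENDPOINT when it is first imported).
    """
    set_in_time = "huggingface_hub" not in sys.modules
    os.environ["HF_ENDPOINT"] = endpoint
    return set_in_time


if __name__ == "__main__":
    if not use_mirror():
        print("huggingface_hub is already imported; restart Python and set HF_ENDPOINT first.")
    print("HF_ENDPOINT =", os.environ["HF_ENDPOINT"])
```

The assignment only affects the current Python process and the child processes it starts; it does not change the system-wide environment on Windows or Linux.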

Saving a model

Source tutorial: https://huggingface.co/docs/transformers/quicktour#save-a-model .

Once your model is fine-tuned, you can save it with its tokenizer using PreTrainedModel.save_pretrained():

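    pt_save_directory = "./pt_save_pretrained"
    pt_model.save_pretrained(pt_save_directory)  # save the tokenizer the same way: tokenizer.save_pretrained(pt_save_directory)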

When you are ready to use the model again, reload it with PreTrainedModel.from_pretrained():

    from transformers import AutoModelForSequenceClassification
    pt_model = AutoModelForSequenceClassification.from_pretrained("./pt_save_pretrained")
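Before reloading, it can be useful to confirm that the save directory actually contains a model. The following is a minimal sketch using only the standard library. The helper name `missing_model_files` is hypothetical, and the file names it looks for (config.json plus one of two common weight files) are an assumption about what save_pretrained() usually writes, not an exhaustive list; sharded checkpoints, for example, use different names.

```python
from pathlib import Path
from typing import List

# Assumption: save_pretrained() writes config.json plus at least one weights file.
# These are common weight file names, not a complete list.
WEIGHT_FILES = ("model.safetensors", "pytorch_model.bin")


def missing_model_files(save_directory: str) -> List[str]:
    """Return a list of problems found in `save_directory` (empty list = looks fine)."""
    root = Path(save_directory)
    if not root.is_dir():
        return [f"{root} is not a directory"]
    problems = []
    if not (root / "config.json").is_file():
        problems.append("config.json is missing")
    if not any((root / name).is_file() for name in WEIGHT_FILES):
        problems.append("no weights file found (looked for: " + ", ".join(WEIGHT_FILES) + ")")
    return problems


if __name__ == "__main__":
    for problem in missing_model_files("./pt_save_pretrained"):
        print("warning:", problem)
```

An empty result only means the expected files exist; it does not validate their contents.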

Cache setup

Source tutorial: https://huggingface.co/docs/transformers/installation#cache-setup .

Pretrained models are downloaded and locally cached at: ~/.cache/huggingface/hub. This is the default directory given by the shell environment variable TRANSFORMERS_CACHE. On Windows, the default directory is given by C:\Users\username\.cache\huggingface\hub. You can change the shell environment variables shown below - in order of priority - to specify a different cache directory:

- Shell environment variable (default): HUGGINGFACE_HUB_CACHE or TRANSFORMERS_CACHE.

- Shell environment variable: HF_HOME.

- Shell environment variable: XDG_CACHE_HOME + /huggingface.
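The priority order above can be sketched as a small lookup function. This is an illustration of the rule as described here, not the library's own code: the function name `resolve_hf_cache_dir` is hypothetical, and appending a `hub` subfolder for HF_HOME and XDG_CACHE_HOME is an assumption based on the default path ~/.cache/huggingface/hub.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def resolve_hf_cache_dir(env: Optional[Mapping[str, str]] = None) -> Path:
    """Return the cache directory implied by the variables, in priority order."""
    env = os.environ if env is None else env
    # 1. An explicit cache variable is used as-is.
    for name in ("HUGGINGFACE_HUB_CACHE", "TRANSFORMERS_CACHE"):
        if env.get(name):
            return Path(env[name]).expanduser()
    # 2. HF_HOME is the Hugging Face home folder; the hub cache sits below it (assumption).
    if env.get("HF_HOME"):
        return Path(env["HF_HOME"]).expanduser() / "hub"
    # 3. XDG_CACHE_HOME + /huggingface, again with the hub subfolder (assumption).
    if env.get("XDG_CACHE_HOME"):
        return Path(env["XDG_CACHE_HOME"]).expanduser() / "huggingface" / "hub"
    # 4. Default: ~/.cache/huggingface/hub (C:\Users\username\.cache\huggingface\hub on Windows).
    return Path.home() / ".cache" / "huggingface" / "hub"


if __name__ == "__main__":
    print(resolve_hf_cache_dir())
```

Passing a plain dict instead of the real environment makes it easy to try out combinations, for example `resolve_hf_cache_dir({"HF_HOME": "D:/hf"})`.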

Offline use

Source tutorial: https://huggingface.co/docs/transformers/installation#fetch-models-and-tokenizers-to-use-offline .

Another option for using 🤗 Transformers offline is to download the files ahead of time, and then point to their local path when you need to use them offline. There are three ways to do this:

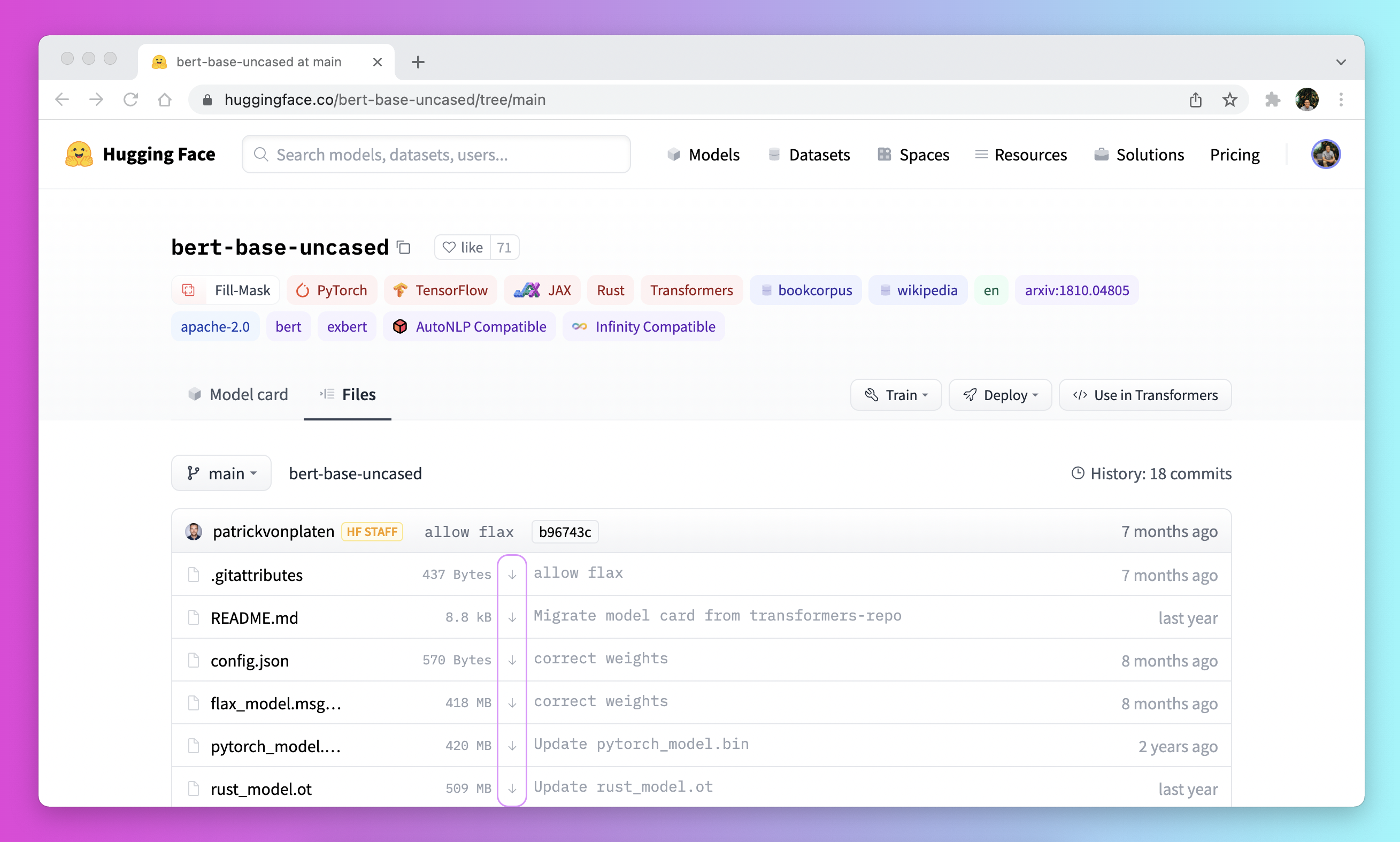

- Download a file through the user interface on the Model Hub by clicking on the ↓ icon.

- Use the PreTrainedModel.from_pretrained() and PreTrainedModel.save_pretrained() workflow:

  - Download your files ahead of time with PreTrainedModel.from_pretrained():

        from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

        tokenizer = AutoTokenizer.from_pretrained("bigscience/T0_3B")
        model = AutoModelForSeq2SeqLM.from_pretrained("bigscience/T0_3B")

  - Save your files to a specified directory with PreTrainedModel.save_pretrained():

        tokenizer.save_pretrained("./your/path/bigscience_t0")
        model.save_pretrained("./your/path/bigscience_t0")

  - Now when you're offline, reload your files with PreTrainedModel.from_pretrained() from the specified directory:

        tokenizer = AutoTokenizer.from_pretrained("./your/path/bigscience_t0")
        model = AutoModelForSeq2SeqLM.from_pretrained("./your/path/bigscience_t0")

- Programmatically download files with the huggingface_hub library:

  - Install the huggingface_hub library in your virtual environment:

        python -m pip install huggingface_hub

  - Use the hf_hub_download function to download a file to a specific path. For example, the following command downloads the config.json file from the T0 model to your desired path:

        from huggingface_hub import hf_hub_download

        hf_hub_download(repo_id="bigscience/T0_3B", filename="config.json", cache_dir="./your/path/bigscience_t0")

Once your file is downloaded and locally cached, specify its local path to load and use it:

    from transformers import AutoConfig

    config = AutoConfig.from_pretrained("./your/path/bigscience_t0/config.json")

See the How to download files from the Hub section for more details on downloading files stored on the Hub.
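One practical detail: hf_hub_download returns the local path of the downloaded file, and when cache_dir is given the file may sit in a nested folder rather than directly under that directory. The sketch below searches a download directory for a file by name. The helper name `find_downloaded_file` is hypothetical, and the nested layout mentioned in the comment (models--org--name/snapshots/revision/) is an assumption about the cache structure that may differ between library versions.

```python
from pathlib import Path
from typing import Optional


def find_downloaded_file(root: str, filename: str = "config.json") -> Optional[Path]:
    """Return the first file named `filename` found anywhere under `root`, else None.

    Assumption: with cache_dir set, files may land in a nested folder such as
    <root>/models--<org>--<name>/snapshots/<revision>/<filename>.
    """
    matches = sorted(Path(root).rglob(filename))
    return matches[0] if matches else None


if __name__ == "__main__":
    path = find_downloaded_file("./your/path/bigscience_t0")
    if path is None:
        print("config.json not found; download it first")
    else:
        print("found:", path)
```

When the download call is in the same script, using its return value directly is simpler than searching; the search is mainly useful later, on a machine that is already offline.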

GitHub Issues

‘_datasets_server’ from ‘datasets.utils’

Source link: https://github.com/modelscope/modelscope/issues/836

Install datasets:

    $ pip install datasets==2.18.0
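After installing, the version that is actually active in the current environment can be checked from Python. This is a small sketch using the standard library's importlib.metadata; the helper name `installed_version` is made up for this post, and 2.18.0 is simply the version pinned above.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional

PINNED_DATASETS = "2.18.0"  # version pinned in this post


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version("datasets")
    if found is None:
        print("datasets is not installed in this environment")
    elif found != PINNED_DATASETS:
        print(f"datasets {found} is installed, expected {PINNED_DATASETS}")
    else:
        print(f"datasets {found} matches the pinned version")
```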

Conclusion

Blog post number seventy-nine is done. Happy!!!!

Today is another day full of hope.