Preface

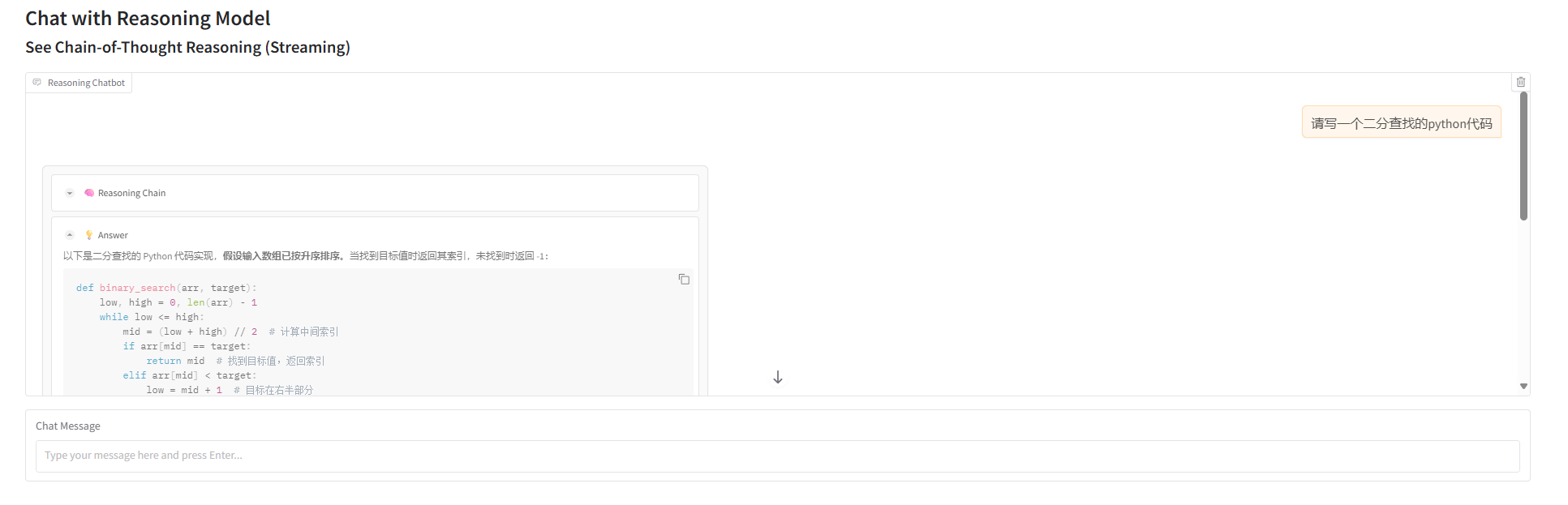

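A Gradio example for a reasoning model: the chat UI streams the model's chain of thought (`reasoning_content`) and its final answer (`content`) into two separate message bubbles.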

Operating System: Ubuntu 22.04.4 LTS

References

Script

```python
import os

import gradio as gr
from gradio import ChatMessage
from openai import OpenAI

# API key and endpoint are read from environment variables
openai_api_key: str | None = os.environ.get("OPENAI_DEEPSEEK_API_KEY")
openai_api_base: str | None = os.environ.get("OPENAI_DEEPSEEK_API_BASE")

client: OpenAI = OpenAI(
    api_key=openai_api_key,
    base_url=openai_api_base,
)

model: str = "deepseek-r1-250528"

REASONING_TITLE = "🧠 Reasoning Chain"
ANSWER_TITLE = "💡 Answer"


def _title(msg) -> str | None:
    """Return the bubble title from a Chatbot message (metadata may be a dict, an object, or absent)."""
    metadata = msg.get("metadata") or {}
    if isinstance(metadata, dict):
        return metadata.get("title")
    return getattr(metadata, "title", None)


def stream_reasoning_response(user_message: str, messages: list):
    """
    Stream reasoning_content and content into two separate chat bubbles.
    """
    # Rebuild an OpenAI-style history from the Chatbot messages.
    # The latest user message was already appended by user_message(), so it is
    # not added again here (the original appended it twice). Earlier reasoning
    # bubbles are skipped so old chains of thought are not sent back to the model.
    chat_history: list[dict[str, str]] = []
    for msg in messages:
        if msg["role"] == "user":
            chat_history.append({"role": "user", "content": msg["content"]})
        elif msg["role"] == "assistant" and _title(msg) != REASONING_TITLE:
            chat_history.append({"role": "assistant", "content": msg["content"]})

    reasoning_buffer: str = ""
    answer_buffer: str = ""

    # Two placeholder bubbles: one for the reasoning chain, one for the answer
    messages.append(ChatMessage(role="assistant", content="", metadata={"title": REASONING_TITLE}))
    reasoning_message_index: int = len(messages) - 1
    messages.append(ChatMessage(role="assistant", content="", metadata={"title": ANSWER_TITLE}))
    answer_message_index: int = len(messages) - 1

    stream = client.chat.completions.create(model=model, messages=chat_history, stream=True)

    for chunk in stream:
        if not chunk.choices:  # some providers send a final chunk with no choices
            continue
        delta = chunk.choices[0].delta
        # Read both fields independently: reasoning_content can be present but None
        # while the answer streams, and an if/elif on hasattr() would then drop content.
        reasoning_content: str | None = getattr(delta, "reasoning_content", None)
        content: str | None = getattr(delta, "content", None)

        updated = False
        if reasoning_content:
            reasoning_buffer += reasoning_content
            messages[reasoning_message_index] = ChatMessage(
                role="assistant", content=reasoning_buffer, metadata={"title": REASONING_TITLE}
            )
            updated = True
        if content:
            answer_buffer += content
            messages[answer_message_index] = ChatMessage(
                role="assistant", content=answer_buffer, metadata={"title": ANSWER_TITLE}
            )
            updated = True

        if updated:
            yield messages


def user_message(msg, chat_history):
    # Show the user's message in the chat right away and clear the textbox
    chat_history.append(ChatMessage(role="user", content=msg))
    return "", chat_history


with gr.Blocks() as demo:
    gr.Markdown("# Chat with Reasoning Model<br><small>See Chain-of-Thought Reasoning (Streaming)</small>")

    chatbot = gr.Chatbot(
        type="messages",
        label="Reasoning Chatbot",
        render_markdown=True,
    )
    input_box = gr.Textbox(
        lines=1,
        label="Chat Message",
        placeholder="Type your message here and press Enter...",
    )
    msg_store = gr.State("")

    # 1) stash the text and clear the box, 2) echo it into the chat, 3) stream the reply
    input_box.submit(
        lambda msg: (msg, ""),
        inputs=[input_box],
        outputs=[msg_store, input_box],
        queue=False,
    ).then(
        user_message,
        inputs=[msg_store, chatbot],
        outputs=[input_box, chatbot],
        queue=False,
    ).then(
        stream_reasoning_response,
        inputs=[msg_store, chatbot],
        outputs=chatbot,
    )

demo.launch()
```
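To check the chunk-routing logic without an API key or a running Gradio app, here is a minimal offline sketch. It assumes each streamed chunk is shaped like an OpenAI-style chat completion chunk, where `chunk.choices[0].delta` carries `content` plus, for R1-style models, an extra `reasoning_content` field that may be missing or `None`. The names `route_deltas` and `fake_chunk` are hypothetical, and the fake chunks only stand in for real API responses.

```python
from types import SimpleNamespace
from typing import Iterable, Iterator, Optional, Tuple


def route_deltas(chunks: Iterable) -> Iterator[Tuple[str, str]]:
    """Accumulate reasoning text and answer text from streamed chunks.

    Yields (reasoning_so_far, answer_so_far) after each chunk that adds text.
    Assumption: chunk.choices[0].delta has `content` and possibly
    `reasoning_content` (either may be missing or None).
    """
    reasoning, answer = "", ""
    for chunk in chunks:
        if not chunk.choices:  # e.g. a trailing usage-only chunk
            continue
        delta = chunk.choices[0].delta
        # Read both fields independently instead of an if/elif on hasattr()
        r: Optional[str] = getattr(delta, "reasoning_content", None)
        c: Optional[str] = getattr(delta, "content", None)
        if not r and not c:  # nothing new to display
            continue
        reasoning += r or ""
        answer += c or ""
        yield reasoning, answer


def fake_chunk(reasoning: Optional[str] = None, content: Optional[str] = None):
    """Hypothetical helper: builds a stand-in chunk for offline testing."""
    delta = SimpleNamespace(reasoning_content=reasoning, content=content)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


if __name__ == "__main__":
    stream = [
        fake_chunk(reasoning="Think step 1. "),
        fake_chunk(reasoning="Think step 2."),
        fake_chunk(content="Answer: "),
        SimpleNamespace(choices=[]),  # skipped: no choices
        fake_chunk(),                 # skipped: both fields are None
        fake_chunk(content="42"),
    ]
    for reasoning_so_far, answer_so_far in route_deltas(stream):
        print(repr(reasoning_so_far), "|", repr(answer_so_far))
```

In the Gradio script, each yielded pair would be written into the two placeholder `ChatMessage` bubbles. Keeping the routing in a pure generator like this is just one way to make it testable without network access.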

Result for the prompt "请写一个二分查找的python代码" ("Write a binary search in Python"):

Closing

My 347th blog post is done, happy!!!!

Today is another day full of hope.